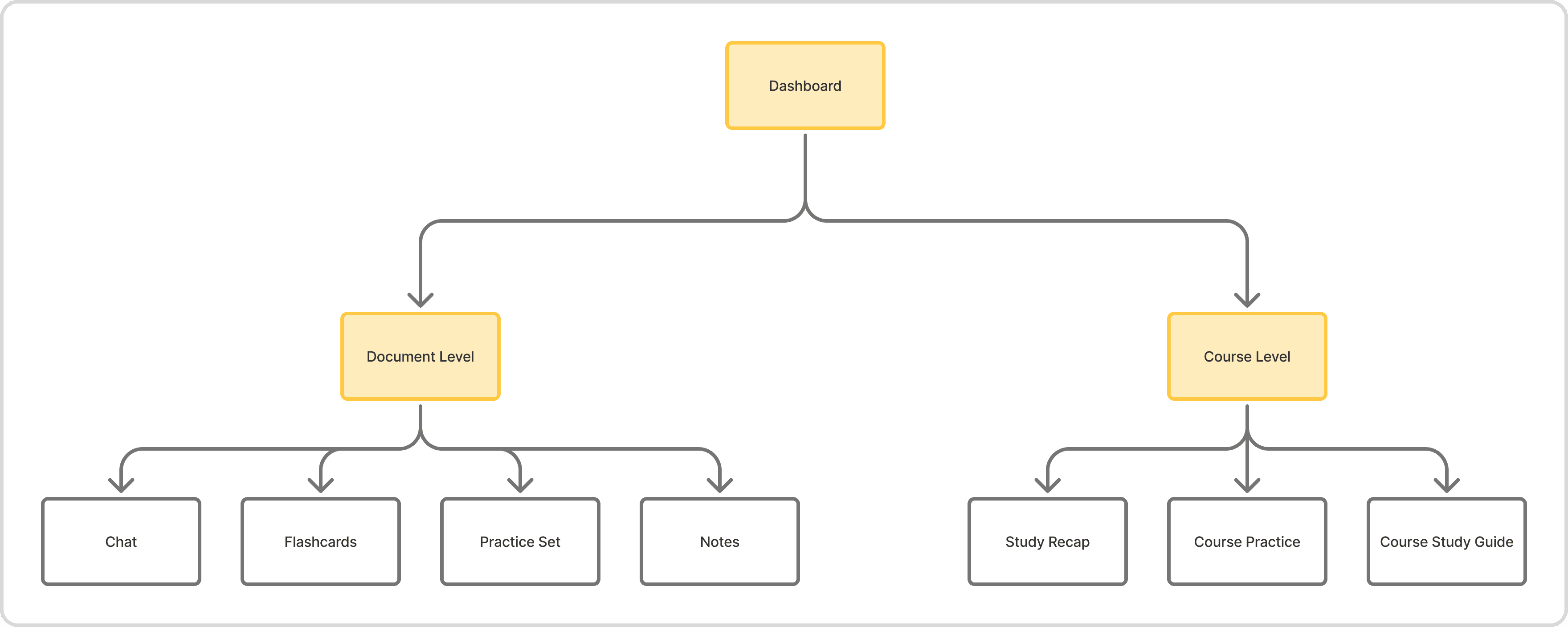

Overview

Type

Internship

Team

2 Designers, 1 PM, CEO

Timeline

August 2025 - December 2025

Skills

UX Design, Interface Design, Wireframing, Prototyping, Product Thinking, Usability Testing

Context

Lumeno AI is an innovative learning platform designed to empower students globally by offering accessible, engaging, and personalized study tools, including chat-based tutoring, custom notes, flashcards, and study plans derived from uploaded syllabi.

As a product designer, I was responsible for defining key features across web and mobile applications, collaborating directly with the team to deliver user-centred solutions that improved engagement and adoption.

The Challenge

Users didn’t discover the existing features and didn’t see Lumeno as a solution

Features were scattered, and students had trouble both navigating the app and recognizing what set Lumeno apart in the market.

3 insights from interviews

Poor Information Architecture

Confusing organization made it difficult for students to discover the app’s full potential

Lack of Differentiation

Users described Lumeno as “just another AI study app.” The lack of a unique offering was apparent

Lack of Personalization

Students didn’t feel the product adapted to their individual needs and situations

The Solution

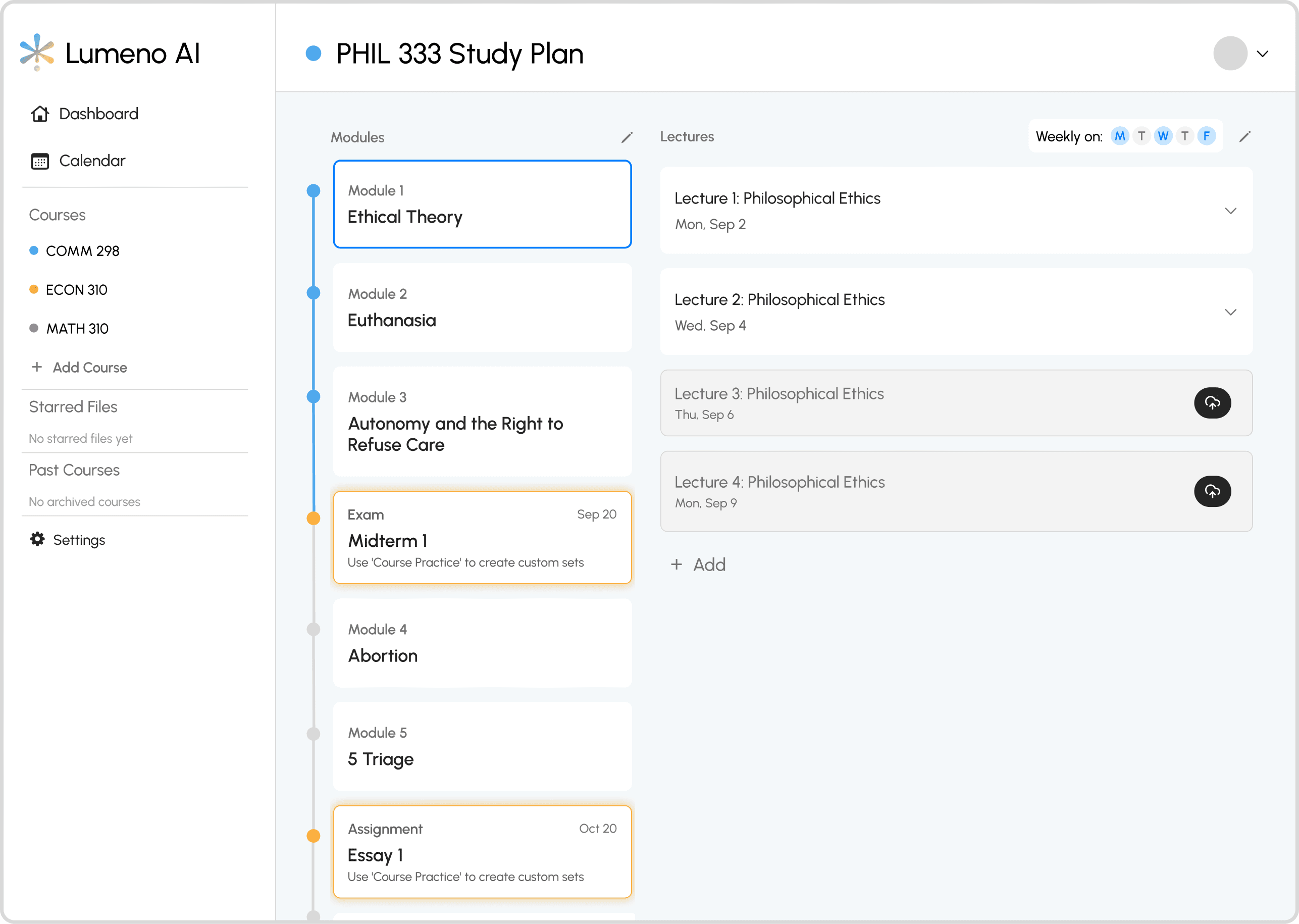

Study Plan From a Single Syllabus Upload

User research revealed that students spend a lot of time at the start of each term reading lengthy syllabi and manually organizing coursework and assessments in tools like Notion. To simplify this process, the Study Plan feature lets users upload their syllabus to generate an instant, structured breakdown of modules, lectures, and key assessments.

Upload syllabus -> System auto-parses for modules, lectures and assessments -> One button per lecture.
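The output of that flow can be pictured as a small data model. The sketch below is illustrative only: the class names, fields, and the `lecture_buttons` helper are assumptions made for this write-up, not Lumeno's actual implementation, and the syllabus parsing step itself is left out.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Lecture:
    title: str


@dataclass
class Assessment:
    title: str


@dataclass
class Module:
    title: str
    lectures: list[Lecture] = field(default_factory=list)


@dataclass
class StudyPlan:
    # Hypothetical shape of a parsed syllabus: modules that contain
    # lectures, plus a flat list of key assessments.
    modules: list[Module] = field(default_factory=list)
    assessments: list[Assessment] = field(default_factory=list)


def lecture_buttons(plan: StudyPlan) -> list[str]:
    """Return one button label per lecture, in syllabus order."""
    return [
        f"{module.title}: {lecture.title}"
        for module in plan.modules
        for lecture in module.lectures
    ]


# Made-up example data, standing in for a parsed syllabus.
example_plan = StudyPlan(
    modules=[
        Module("Module 1", [Lecture("Lecture 1"), Lecture("Lecture 2")]),
        Module("Module 2", [Lecture("Lecture 3")]),
    ],
    assessments=[Assessment("Midterm"), Assessment("Final exam")],
)
```

Calling `lecture_buttons(example_plan)` yields three labels, one for each lecture, which mirrors the "one button per lecture" step.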

Research and Concepts

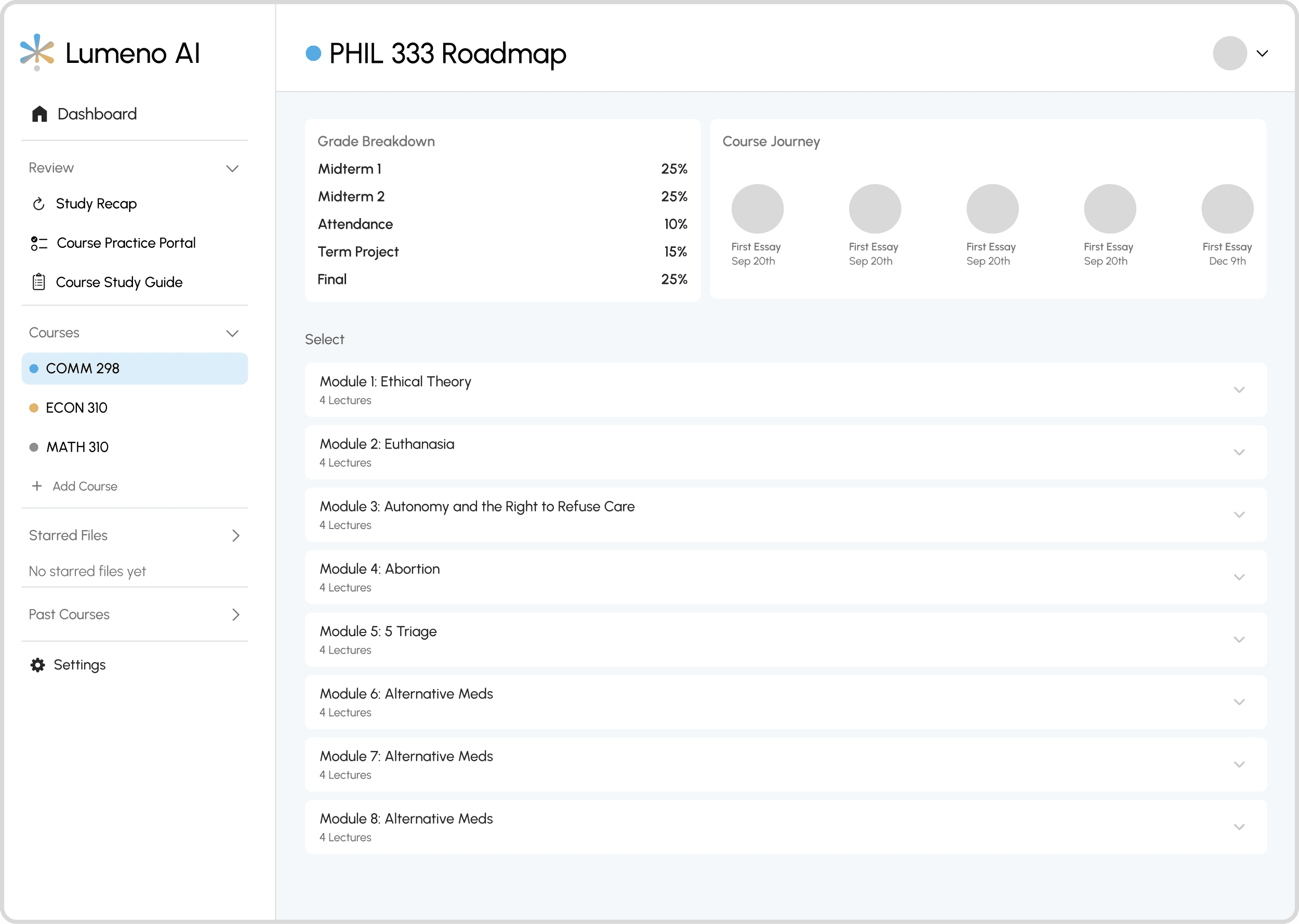

Concept 1: Module-Based Structure

This design featured a file-management-inspired system with lectures nested inside modules. Users liked the structural hierarchy, but the nested lectures and unclear call to action created confusion and left them feeling overwhelmed.

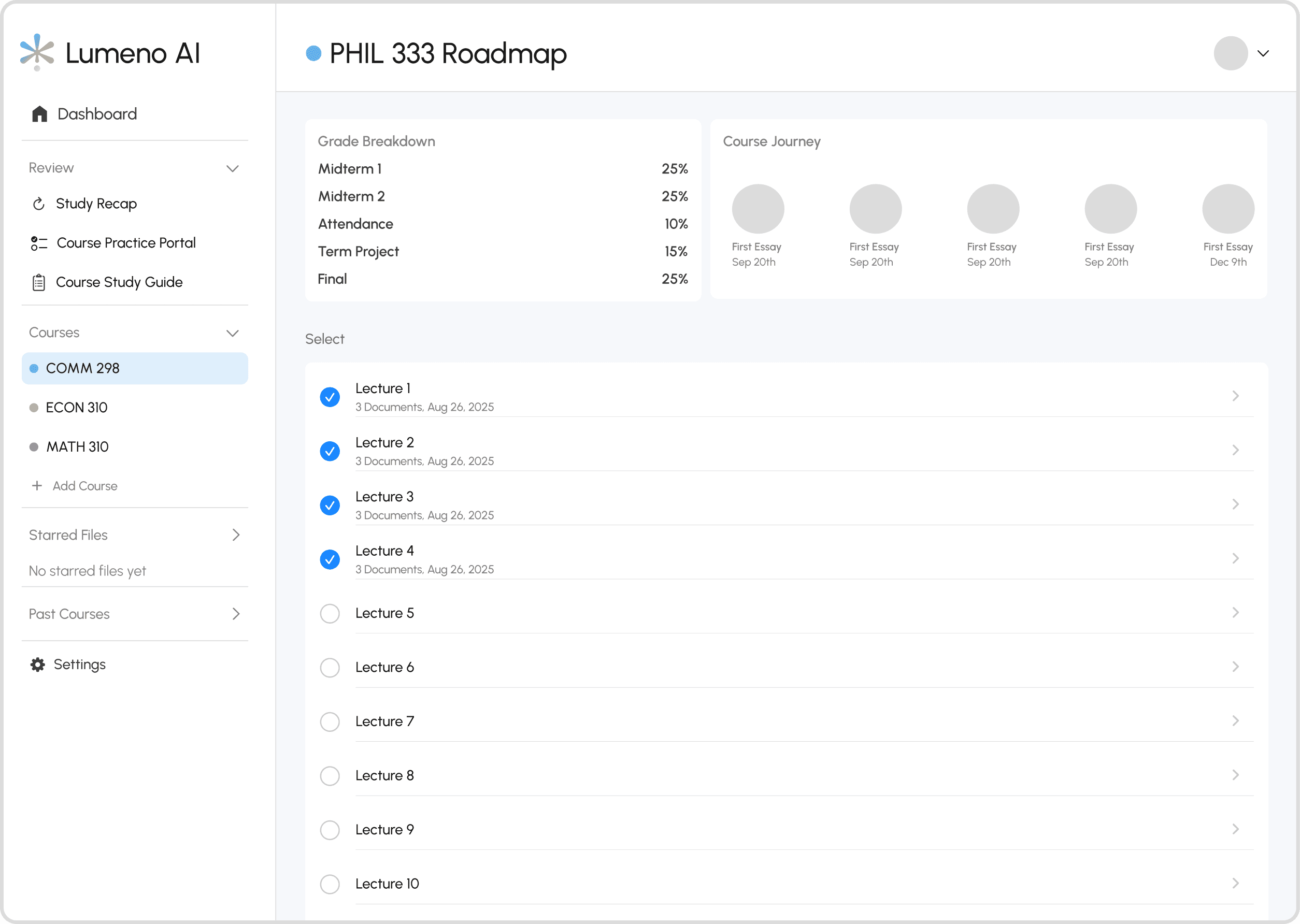

Concept 2: Lecture-Based List

While this design eliminated nested lectures, only 10% of users preferred it. The checkbox that became selected once a user uploaded a document to a lecture was unclear, and the long scroll through 24-36 lectures in a term added friction.

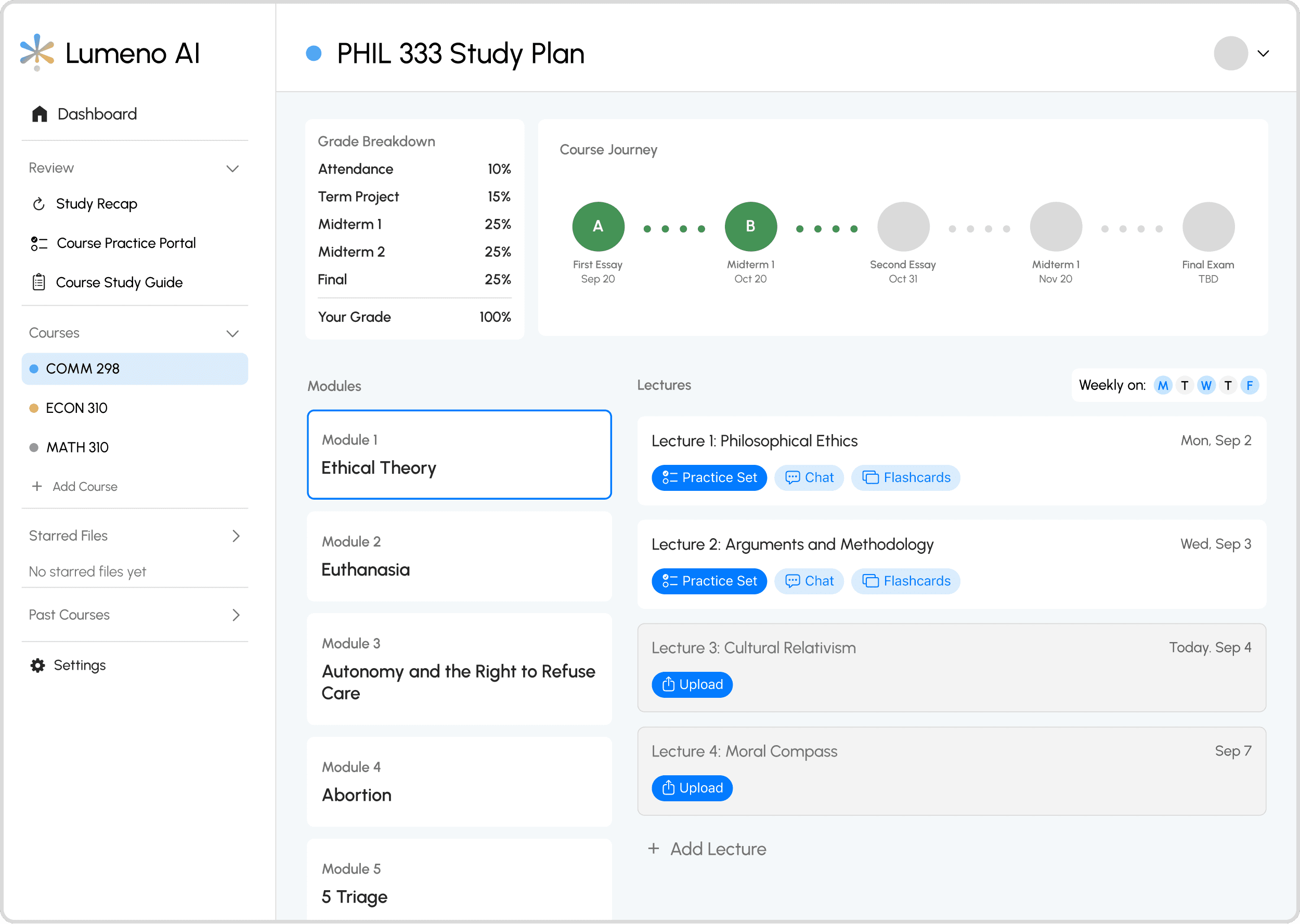

Concept 3: Split View

This design, with a modules menu on the right and lectures on the left, felt familiar to users but still seemed overcomplicated, with too many buttons. The grade-tracking feature at the top was also seen as taking up too much space while staying empty for most of the term.

Winner - Split View (v2)

The final design incorporated a vertical progress bar that addressed the concerns about the grade breakdown and the number of buttons. Little did I know that, while this design felt promising and full of value to the user, I was wrong about a few assumptions I had made…

User Response to Study Plan

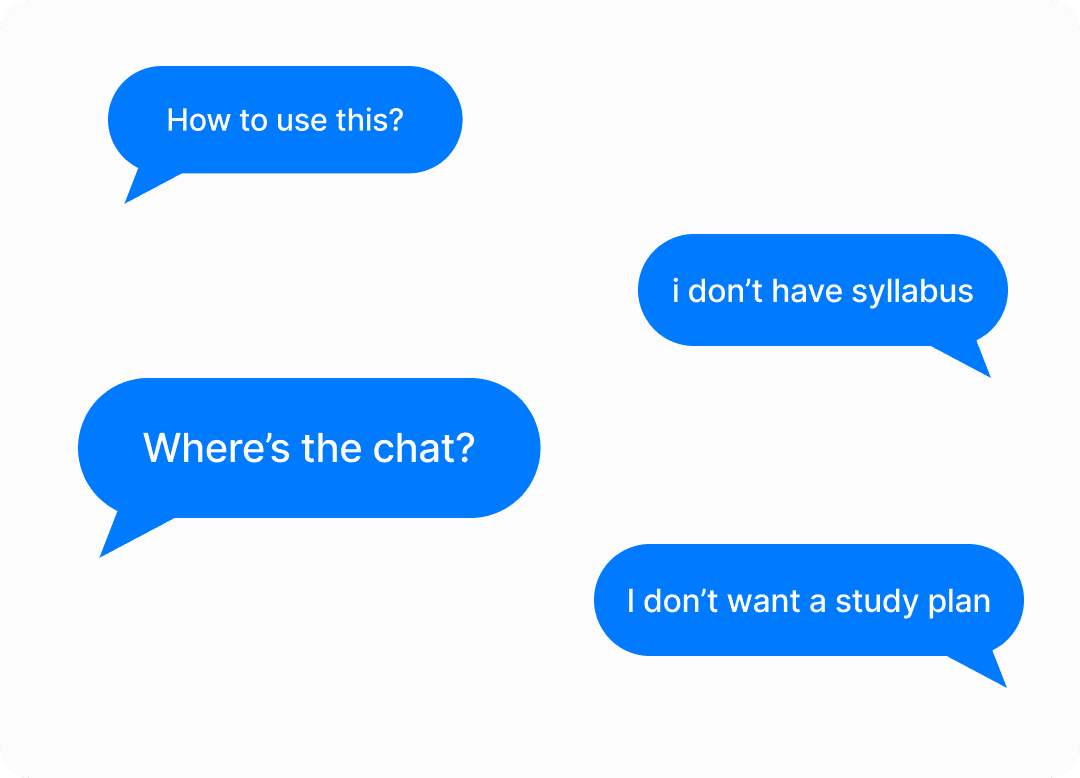

Problem: Users Were Overwhelmed and Hesitant to Organize Upfront.

The Study Plan was added to onboarding, but it made the flow longer and caused major drop-offs. Users were confused and flooded the support chat with questions, showing clear frustration. They also weren’t familiar with the feature and preferred AI tools that let them start using the app right away without setup.

Feature Support

Users who committed to uploading their syllabus found strong value in the Study Plan, viewing it as a standout feature compared to other apps. It was especially popular among first-year students who wanted structure and easy organization as they adjusted to university life. This validated the idea but showed the need to make it optional and refine the flow to appeal to a broader audience and reduce drop-offs.

The Pivot

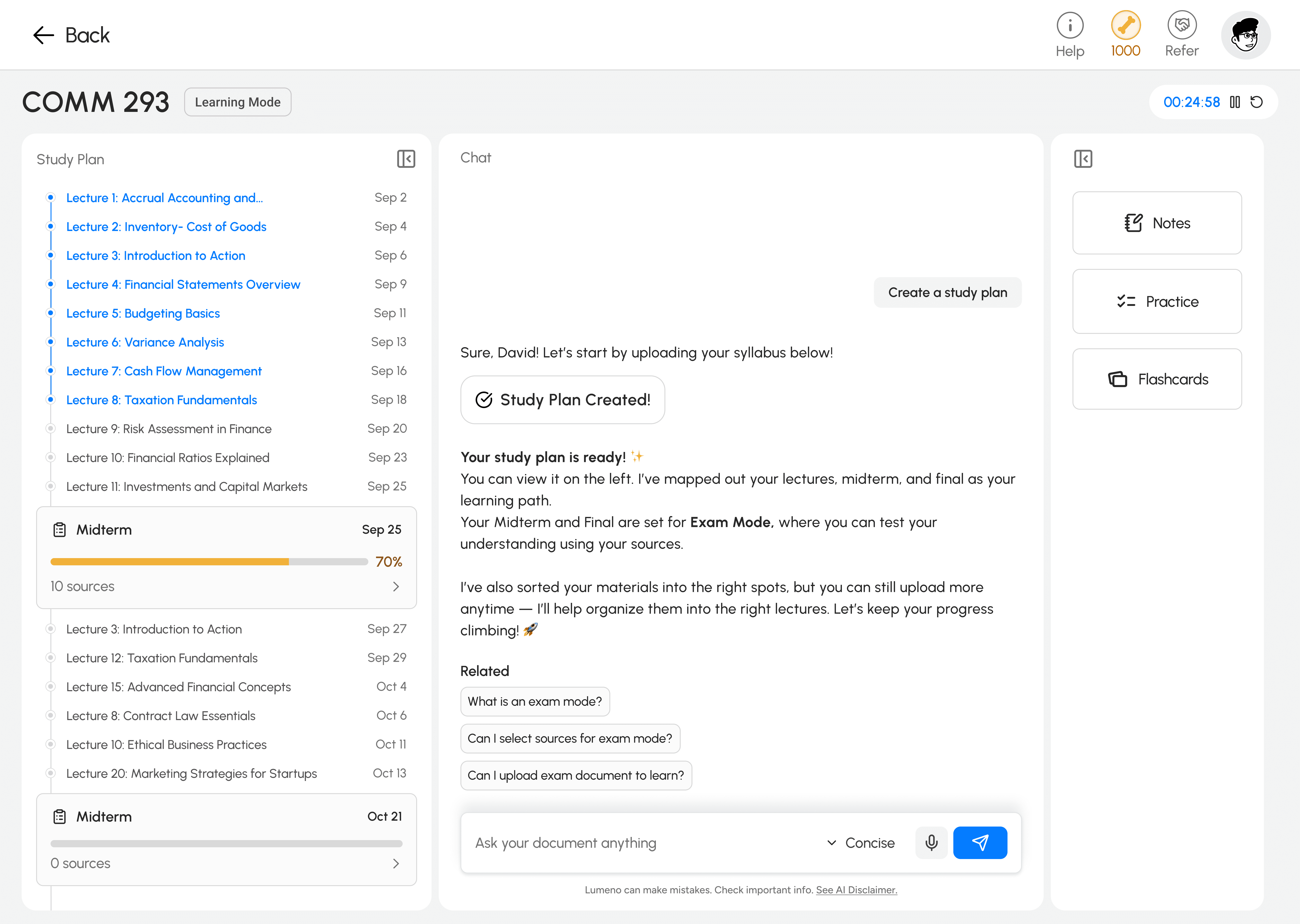

Pivot: Integration with Learning Interface

The Study Plan was moved into the learning feature itself, making it optional. Now, students jump straight to chat, with Study Plan available on demand.

Pivot: Aligning with Users’ Existing Study Habits

The previous Study Plan assumed users studied lecture by lecture, forcing them to upload documents for each class and progress one at a time. However, interviews and behavioral insights revealed most students actually study around assessments. The redesign reflected this shift by allowing users to upload materials directly to each assessment section and study more efficiently from there.

The Impact

Reflection

The Gap Between Testing and Reality

I was confident in the split-view design, and usability tests and interviews seemed to prove it worked. But once we launched, users completely ignored it. That gap between test results and real behavior taught me something important: testing confirms usability, not desirability. I had validated that users could use the feature, but not that they wanted to organize first when opening the app, and that is a completely different insight.

The Type of Testing Matters

I tested three concepts through usability sessions, which gave helpful feedback. But if I had run A/B tests after launch, I would have caught the drop-off pattern early. I should have tested the Study Plan with 20% of users to track drop-offs, adoption, and time-to-study. It was not that I did not value testing; I just tested the wrong thing. I checked usability instead of whether the flow fit how students actually study.
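A 20% exposure like the one described above is often done with deterministic bucketing, so each user consistently sees the same variant. The following is a minimal sketch under that assumption; the function names, the use of a hashed user ID, and the drop-off calculation are hypothetical and not taken from Lumeno's codebase.

```python
import hashlib

ROLLOUT_PERCENT = 20  # share of users who would see the Study Plan flow


def in_study_plan_test(user_id: str, rollout_percent: int = ROLLOUT_PERCENT) -> bool:
    """Deterministically assign a user to the test group.

    The user ID is hashed and mapped to a bucket from 0 to 99, so the
    same user always lands in the same group across sessions.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    return bucket < rollout_percent


def drop_off_rate(started: int, completed: int) -> float:
    """Fraction of users who started a flow but did not complete it."""
    if started == 0:
        return 0.0
    return (started - completed) / started
```

Comparing `drop_off_rate` for the test group against the remaining users, alongside adoption and time-to-study, would surface the onboarding drop-off without exposing every user to the longer flow.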

Designing for Reality, Not Ideals

I designed Study Plan around how I thought students should study: organized and linear. In reality, they studied reactively, driven by deadlines and immediate problems. Realizing this shifted my approach from building ideal workflows to designing for real behavior, which turned failure into success.